In 2015 the Cambridge Research Data Team organised several discussions between funders and researchers. In May 2015 we hosted Ben Ryan from EPSRC, followed in August by a discussion with Michael Ball from BBSRC. Now we have invited our two main charity funders to discuss their views on data management and sharing with Cambridge researchers.

David Carr from the Wellcome Trust and Jamie Enoch from Cancer Research UK (CRUK) met with our academics on Friday 22 January at the Gurdon Institute. The Gurdon Institute was founded jointly by the Wellcome Trust and CRUK to promote research in the areas of developmental biology and cancer biology, and to foster a collaborative environment for independent research groups with diverse but complementary interests.

This blog post summarises the presentations and discusses the data sharing expectations of the Wellcome Trust and CRUK. A second, related blog post, ‘In conversation with Wellcome Trust and CRUK’, summarises the question and answer session held with a group of researchers on the same day.

Wellcome Trust’s requirements for data management and sharing

Sharing research data is key for Wellcome’s goal of improving health

David Carr started his presentation by explaining that the Wellcome Trust’s mission is to support research with the goal of improving health. The Trust is therefore committed to ensuring that research outputs (including research data) can be accessed and used in ways that maximise health and societal benefits. David reminded the audience of the benefits of data sharing. Data which is shared has the potential to:

- Enable validity and reproducibility of research findings to be assessed

- Increase the visibility and use of research findings

- Enable research outputs to be used to answer new questions

- Reduce duplication and waste

- Enable access to data for other key communities, such as the public, policymakers and healthcare professionals

Data sharing goes mainstream

David gave an overview of data sharing expectations from various angles. He started by referring to the Royal Society’s 2012 report, Science as an open enterprise, which sets sharing as the standard for doing science. He then mentioned other initiatives, such as the G8 Science Ministers’ statement, the joint report from the Academy of Medical Sciences, BBSRC, MRC and Wellcome Trust on the reproducibility and reliability of biomedical research, and the UK Concordat on Open Research Data. The take-home message was that sharing data and other research outputs is increasingly becoming a global expectation and a core element of good research practice.

Wellcome Trust’s policy for open data

The next part of David’s presentation covered the Wellcome Trust’s policy on data management and sharing. The policy was first published almost a decade ago (2007), with subsequent modifications in 2010. Its principle is simple: research data should be shared and preserved in a manner which maximises its value to advance research and improve health. The Wellcome Trust also requires data management plans as a compulsory part of grant applications where the proposed research is likely to generate a dataset of significant value to researchers and other users. This ensures that researchers understand the importance of data management and sharing and plan for it from the start of their projects.

Cost of data sharing

Planning for data management and sharing involves costing these activities in the grant proposal. The Wellcome Trust’s FAQ guidance on its data sharing policy says: “The Trust considers that timely and appropriate data management and sharing should represent an integral component of the research process. Applicants may therefore include any costs associated with their proposed approach as part of their proposal.” David then outlined the types of costs that can be included in grant applications (including dedicated staff, hardware and software, and data access costs). He noted that, under the current draft guidance on costing for data management, estimated costs for long-term preservation extending beyond the lifetime of the grant are not eligible, although costs associated with depositing data in recognised data repositories can be requested.

Key priorities and emerging areas in data management and sharing

Infrastructure

The Wellcome Trust has also identified key priorities and emerging areas where work is needed to better support data management and sharing. The first was to provide resources and platforms for data sharing and access. David pointed out that, wherever available, discipline-specific data repositories are the best home for research data, as they provide rich metadata standards, community curation and better discoverability of datasets.

However, the sustainability of discipline-specific repositories is sometimes uncertain. These resources are often perceived as ‘free’, yet research data submitted to ‘free’ repositories has to be stored somewhere, and the amount of data produced and shared is growing exponentially. Someone has to pay for the storage and long-term curation of data in discipline-specific repositories. A further consideration is that many disciplines do not have their own repositories and therefore have to rely heavily on institutional support.

Access

The Wellcome Trust funds a large number of projects in clinical areas. Dealing with patient data requires careful ethical consideration and planning from the very start of a project to ensure that the data can be successfully shared at its end. To support researchers working with patient data, the Expert Advisory Group on Data Access (a cross-funder advisory body established by MRC, ESRC, Cancer Research UK and the Wellcome Trust) has developed guidance documents and practice papers on handling sensitive data: how to ask for informed consent, how to anonymise data, and the procedures that need to be in place when granting access to data. David stressed that a balance needs to be struck between maximising the use of data and safeguarding research participants.

Incentives for sharing

Finally, if sharing is to become the normal thing to do, researchers need incentives to do so. The Wellcome Trust is keen to work with others to ensure that researchers who generate and share valuable datasets receive appropriate recognition for their efforts. A recent report from the Expert Advisory Group on Data Access proposed several recommendations to incentivise data sharing, with specific roles for funders, research leaders, institutions and publishers. Additionally, to promote data re-use, the Wellcome Trust joined forces with the National Institutes of Health and the Howard Hughes Medical Institute to launch the Open Science Prize, a competition encouraging the prototyping and development of services, tools or platforms that enable open content.

Cancer Research UK’s views on data sharing

The next talk was by Jamie Enoch from Cancer Research UK. Jamie began by saying that because Cancer Research UK (CRUK) is a charity funded by the public, it needs to make the most of the research it funds, and sharing research data is essential to this. Making the most of the data generated through CRUK grants could help accelerate progress towards the aim set out in the charity’s research strategy: to see three quarters of people surviving cancer by 2034. Jamie explained that his post, Research Funding Manager (Data), was created because data sharing is becoming increasingly important for CRUK.

The policy

Jamie introduced the key principles of CRUK’s data sharing policy by presenting the main issues around research data sharing and explaining CRUK’s position on each:

- What needs to be shared? All research data, including unpublished data, source code, databases etc, if it is feasible and safe to do so. CRUK is especially keen to ensure that data underpinning publications is made available for sharing.

- Metadata: Researchers should adhere to community standards/minimum information guidelines where these exist.

- Discoverability: Groups should be proactive in communicating the contents of their datasets and showcasing the data available for sharing

Jamie explained that CRUK really wants to increase the discoverability of data. For example, clinical trials units should ideally provide information on their websites about the data they generate and clear information about how it can be accessed.

- Modes of sharing: Via community or generalist repositories, under the auspices of the PI or a combination of methods

Jamie noted that not all data can or should be made openly available: for ethical reasons, access to some data will have to be restricted. As long as restrictions are justified, it is entirely appropriate to use them. However, if access to data is restricted, the conditions under which access will be granted should be considered at the project outset and clearly outlined in the metadata descriptions to ensure fair governance of access.

- Timeframes: Limited period of exclusive use permitted where justified

Jamie suggested following community standards when deciding on any period of exclusive use of research data. In some communities research data is made accessible at the time of publication; others expect data to be released at the time of generation (especially in collaborative genomics projects). He added that, particularly where new data could affect policy development, it is key that research data is released as soon as possible.

- Preservation: Data to be retained for at least 5 years after grant end

- Acknowledgement: Secondary users of data should credit original researcher and CRUK

- Costs: Appropriately justified costs can be included in grant proposals

As of late 2015, financial support for data management and sharing can be requested as a running cost in grant applications. Jamie explained that there are no specific guidelines setting out eligible and ineligible costs: the most important consideration is whether the costs are well justified and reasonable in the context of the research envisaged.

Jamie stressed that the key point of the CRUK policy is to facilitate data sharing and to engage with the research community, recognising the challenges of data sharing for different projects and the need to work through these collaboratively, rather than enforce the policy in a top-down fashion.

Policy implementation

Jamie then discussed how the CRUK policy is implemented. The main tool is the new requirement for data management plans as a compulsory part of grant applications.

Two of the three main response mode committees, the Science Committee and the Clinical Research Committee, use a two-step process for data management plans. At the application stage, researchers write a short, free-form description of how they plan to adhere to CRUK’s policy on data sharing. Only if the grant is awarded will the beneficiary be asked to write a more detailed data management plan, in consultation with CRUK representatives.

This approach serves two purposes as it:

- ensures that all applicants are aware of CRUK’s expectations on data sharing (they all need to write a short paragraph about data sharing)

- saves researchers’ time: only those applicants who were successful will have to provide a detailed data management plan, and it allows the CRUK office to engage with successful applicants on data sharing challenges and opportunities

In contrast, applicants for the other main CRUK response mode committee, the Population Research Committee, all fill out a detailed data management and sharing plan at application stage because of the critical importance of sharing data from cohort and epidemiological studies.

Outlook for the future

Like the Wellcome Trust, CRUK recognises that cultural change is needed for sharing to become the norm. CRUK has initiated many national and international partnerships to help reward data sharing.

One of these is a collaboration with the YODA (Yale Open Data Access) project, which aims to develop metrics to monitor and evaluate data sharing. Other areas of joint work with other funders include developing guidelines on the ethics of data management and sharing, platforms for data preservation and discoverability, and procedures for working with population and clinical data. Jamie stressed that the key thing for CRUK is to work closely with researchers and research managers to understand the challenges, work through them collaboratively, and consider exciting new initiatives to move the data sharing field forward.

Links

- David Carr’s (Wellcome Trust) presentation

- Jamie Enoch’s (CRUK) presentation

Published 5 February 2016

Written by Dr Marta Teperek, verified by David Carr and Jamie Enoch

Rafael Carazo-Salas

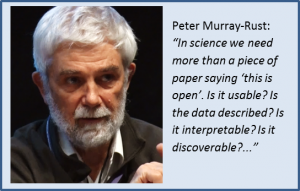

The discussion started off with a request for a definition of what “open” meant. Both Peter and Sarah explained that ‘open’ in science was not simply a piece of paper saying ‘this is open’. Peter said that ‘open’ meant free to use, free to re-use, and free to re-distribute without permission. Open data needs to be usable, described and interpretable. Finally, if data is not discoverable, it is of no use to anyone. Sarah added that sharing is about making data useful, which also involves using open formats and describing the data: context is necessary for the data to be of any value to others.

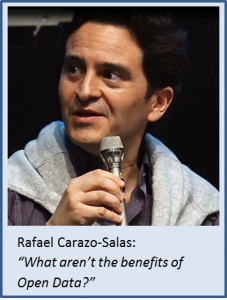

Next came a quick question from Danny: “What are the benefits of Open Data?”, followed by an immediate riposte from Rafael: “What aren’t the benefits of Open Data?” Rafael explained that open data led to transparency in research, re-usability of data, benchmarking, integration and new discoveries and, most importantly, that sharing data kept it alive. If data was not shared and instead simply kept on a computer’s hard drive, no one would remember it months after the initial publication. Sharing is the only way in which data can be used, cited and built upon years after publication. Frances added that research data originating from publicly funded research was paid for by taxpayers, so its value should be maximised. Data sharing is important for research integrity and reproducibility and for ensuring better quality science. Sarah said that the biggest benefit of sharing data was the wealth of re-uses of research data, which often could not be imagined at the time of creation.

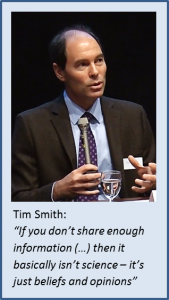

Tim also stressed that if open science became institutionalised and mandated through policies and rules, it would take a very long time before individual researchers fully embraced it and started sharing their research as the default position.

Frances mentioned that for personal and sensitive data, sharing was not as simple as in basic science disciplines. Especially in medical research, it often required the provision of controlled access to data: it mattered not only who would get the data, but also what they would do with it. Frances agreed with Tim that perhaps what was needed was a paradigm shift, in which questions are sent to the data rather than the data being sent to the questions.

Danny mentioned that the traditional way of rewarding researchers was based on publishing and on journal impact factors. She asked whether publishing data could help to start rewarding the process of generating data and making it available. Sarah suggested that, rather than formal peer review of data, it would be better to have an evaluation structure based on the re-use of data, for example valuing data that was downloadable, well-labelled and re-usable.

The final discussion was around incentives for data sharing. Sarah was the first to suggest that the most persuasive incentive for data sharing is seeing the data being re-used and getting credit for it. She also stated that funders and institutions had an important role in incentivising data sharing: if they wished to mandate sharing, they also needed to reward it. Funders could do so when assessing grant proposals; institutions could do so when looking at academic promotions.