At the heart of the University of Cambridge’s Open Access Policy is the commitment “to disseminating its research and scholarship as widely as possible to contribute to society”.

Behind this aim is the benefit to researchers worldwide, as the OA2020 vision has it, to “gain immediate, free and unrestricted access to all of the latest, peer-reviewed research”. It’s some irony indeed that the growth of the availability of research as open access does not automatically result, without further community investment, in a corresponding improvement in discoverability.

Key stakeholders met at the British Library at the end of 2018 to discuss the issue and produced an Open Access Discovery Roadmap, to identify areas of work in this space and encourage collaboration in the scholarly communications community.[1] One major theme was the dependence on reliable article licence metadata, but the main message was the need to find open infrastructure and interoperability solutions for long-term sustainability, “ensuring that the content remains accessible for future generations”.

New web pages on Open Access discovery

Recognizing where we are now, and responding to the present, (probably) partial awareness of the insufficiencies in the OA discovery landscape, Cambridge University Library has added pages to its e-resources website to highlight OA discovery tools and important websites indexing OA content. The motivations for highlighting the options for OA discovery on the new pages are described in this blog post. Our main aim is to bring to light search and discovery of OA as a live topic and prevent OA content “languishing in undiscoverable places rather than being in plain sight for everyone to find.”[2]

Recently, data from Unpaywall for July 2019 has been used to forecast growth in the availability of articles published as OA by 2025. The forecast is based on current trends and is conservative: it does not even take full account of the impact of Plan S, for example. This forecast for 2025 predicts:

- 44% of all journal articles will be available as OA

- 70% of article views will be to OA articles.[3]

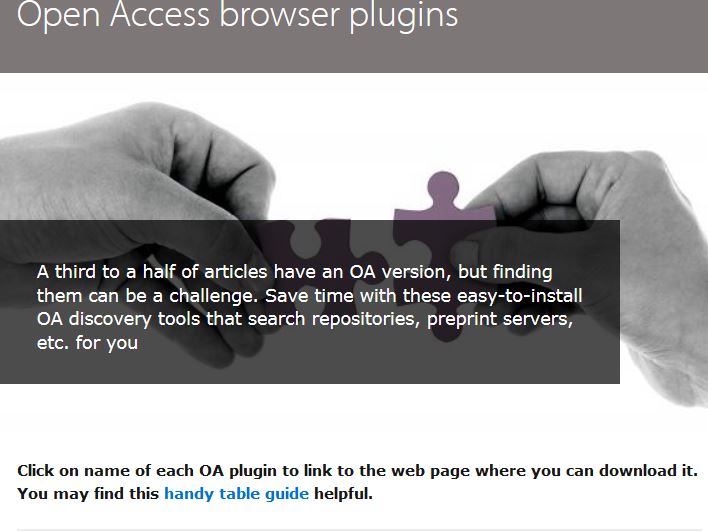

Unpaywall’s estimate of OA availability right now is 31%. A third (growing soon to a half) is a significant proportion for anyone’s money and, wanting to signal the shift, we have used that statistic as our headline on the page summarizing the most well-known and commonly used Open Access browser plugins.

We want the Cambridge researcher to know about these plugins and to be using them, and aim to give minimal but salient information for a selection of one, or several, to be made. Our recommendation is for the Lean Library extension “Library Access” but we have been in touch with Kopernio and QxMD and ensured that members of the University registering to use these plugins will also pick up the connection to our proxy server for seamless off campus access to subscription content where it exists, before the plugin offers an alternative OA version.

Once installed in the user’s browser, the plugin will use the DOI and/or a combination of article metadata elements to search the plugin’s database and multiple other data sources. On finding an OA article, a discreet, clickable pop-up icon will become live (change colour) and will deliver the link or the PDF direct to the user’s desktop. Most plugins are compatible with most browsers, with Lean’s Library Access adding compatibility with Safari last month.
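To make the DOI lookup step concrete, here is a minimal sketch of how a single DOI could be checked against Unpaywall's public REST API. It is not how any particular plugin is implemented. The endpoint form and the field names (`is_oa`, `best_oa_location`, `url_for_pdf`, `url`) are assumptions based on the Unpaywall v2 API as we understand it, and the DOI and email address in the usage note are hypothetical.

```python
from typing import Optional
from urllib.parse import quote

# Assumed base URL of the Unpaywall v2 REST API.
UNPAYWALL_API = "https://api.unpaywall.org/v2/"


def build_lookup_url(doi: str, email: str) -> str:
    """Build the lookup URL for one DOI; the API asks for a contact email."""
    return f"{UNPAYWALL_API}{quote(doi, safe='/')}?email={quote(email)}"


def pick_oa_link(record: dict) -> Optional[str]:
    """Return the preferred OA link from an Unpaywall-style record.

    Prefers a direct PDF link, falls back to the landing page,
    and returns None when the record is not flagged as OA.
    """
    if not record.get("is_oa"):
        return None
    best = record.get("best_oa_location") or {}
    return best.get("url_for_pdf") or best.get("url")
```

A caller would fetch `build_lookup_url("10.1234/abc", "me@example.org")` with any HTTP client, parse the JSON response into a dictionary, and pass it to `pick_oa_link`; a `None` result would correspond to the plugin icon staying inactive.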

Each plugin has a different history of development and certain features that distinguish it from others, and we’ve attempted to bring these out on the page. For example, we note Unpaywall’s trustworthiness in the library space thanks to its exclusion of ResearchGate and Academia.edu; its harvesting and showing of licence metadata; and its reach through the integration of its data into the search of library discovery systems. We also mention features we think are relevant for potential users looking for a quick overview of what’s out there, such as Kopernio’s Dropbox file storage benefits and integration with Web of Science, and QxMD’s special applications for medical researchers and professionals.

In an adjacent page, Search Open Access, there is coverage of search engines focused on discovering OA content (Google Scholar; 1findr; Dimensions; CORE), a range of sites indexing OA content in different disciplines, both publisher- and community-based, and a selection of repositories and preprint servers, including OpenDOAR.

We hope the site design, based on the very cool Judge Business School Toolbox pages, gets across the basics about the OA plugins available and encourages their take-up. The plugins will definitely bring to the researcher OA alternative versions when subscription access puts the article behind a paywall and, regardless, will expose OA articles in search results that will otherwise be hard to find. The pages’ positioning top-left on the e-resources site is deliberately intended to grab attention, at least for reading left-to-right. It is interesting to see the approach other Universities have taken, using the LibGuide format for example at Queen’s University Belfast and at the University of Southampton.

Experiences with Lean Library’s Library Access plugin

Cambridge has had just over a year of experience implementing Lean Library’s Library Access plugin, and it’s been positive. The impetus for the institutional subscription to this product was as much to take action on the problem searchers face of landing on publisher websites and struggling with Shibboleth federated sign-on. This problem is well documented (“spending hours of time to retrieve a minimal number of sources”) and is most recently being addressed by the RA21 project.[4] Equally, though, we wanted to promote OA content in the discovery process, and Lean Library’s latest development of its plugin, to favour the delivery of the OA alternative before the default of the subscription version, is aligned with our values (considerations of versioning aside).

So we’re aiming to bring Lean to Cambridge researchers’ attention by recommending it as the plugin of choice for the period in which we are in transition to “immediate, free and unrestricted access” for all. Only Lean provides the 24-hour updated and context-sensitive linking to our EZproxy server for off campus delivery of subscription content while also promoting OA alternative versions via the deployment of the Unpaywall database. The feedback from the Office of Scholarly Communication is favourable and the statistics support the positivity that we hear from our users (for the last year: 66,731 Google Scholar enhanced links; 49,556 article alternative views; and a rough estimate against our EZproxy logs showing that a probable 2/5 of off campus users are accessing the proxy via Lean).

One area of concern is the ownership of Lean by SAGE Publications, in contrast to the ownership say of Unpaywall as a project of the open-source ImpactStory, and what this means for users’ privacy. The concerns are shared by other libraries implementing Lean.[5] Our approach has been to make the extension’s privacy policy as prominent as possible on our page dedicated to promoting Lean, and to engage with Lean in depth over users’ concerns. We are satisfied with the answers to our questions from Lean and that our users’ data is adequately protected. Even in a rapidly changing arena for OA discovery tools the balance is not so fine when it comes to recommending installation of the Library Access plugin over a preference for the illegitimate and risk-prone SciHub.

Libraries’ discovery services are geared for subscription content

Allowing for the influence of searchers’ discipline on choice of discovery service, it’s little surprise that the traditional library catalogue, even when upgraded to a web scale discovery service, favours the inclusion of subscription over OA content. Of course it does, because this is the content the libraries pay for in the traditional subscription model and the discovery system is pretty much built around that. iDiscover is Cambridge’s discovery space for institutional subscriptions and print holdings of the University’s libraries, and within iDiscover Open Access repository content has been enabled for search. Further, the pipe for the institutional repository content (Apollo) is established.

Nonetheless Cambridge will be looking to take advantage of the forthcoming link resolver service for Unpaywall. This is due for release in November 2019 and will surface a link to search Unpaywall from iDiscover when subscription content is unavailable. This link should usually kick in when the search in iDiscover is expanded beyond subscription content; a form of this has already been enabled by at least one university by including the oadoi.org lookup in the Alma configuration.

The righting moment in the angle of list is that point a ship must find to keep it from capsizing, and library discovery system providers’ integration with OA feels a bit like that: the OA indication was included in the May 2018 iDiscover release and suppliers have been working with CORE for inclusion of CORE content since 2017. That righting moment may be just over the horizon as integration with Unpaywall arrives and the “competition” element dissipates. As the consultancy that JISC used to review the OA discovery tools commented: “As the OA discovery landscape is crowded, OA discovery products compete for space and efficacy against established public infrastructure, library discovery services and commercial services”.[6]

A diffuse but developing landscape

Easy-to-install and effective to use, the OA discovery tools we are promoting are still widely thought of as at best providing a patch, a sticking-plaster, to the problem. A plethora of plugins is not necessarily what the researcher wants, or is attracted by, however necessary the plugin may be to saving time and exposing content in discovery. Possibly the really telling use case has yet to be tried wherein the plugin comes into its own in a big deal cancellation scenario.

Usage statistics for the Lean Library Access plugin are probably a reflection of the fact that the provision of most article content that is required by the University is available via IP access as subscription, and the need for the plugin is almost entirely limited to the off campus user. The Lean plugin’s relatively modest totals are though consistent with reports of plugin adoption by institutions that have cancelled big deals. The poll of the Bibsam Consortium members revealed 75% of researchers did not have any plug-in installed; the percentage for the University of Vienna in particular was 71%; the KTH Royal Institute of Technology authors “rarely used” a plugin.[7]

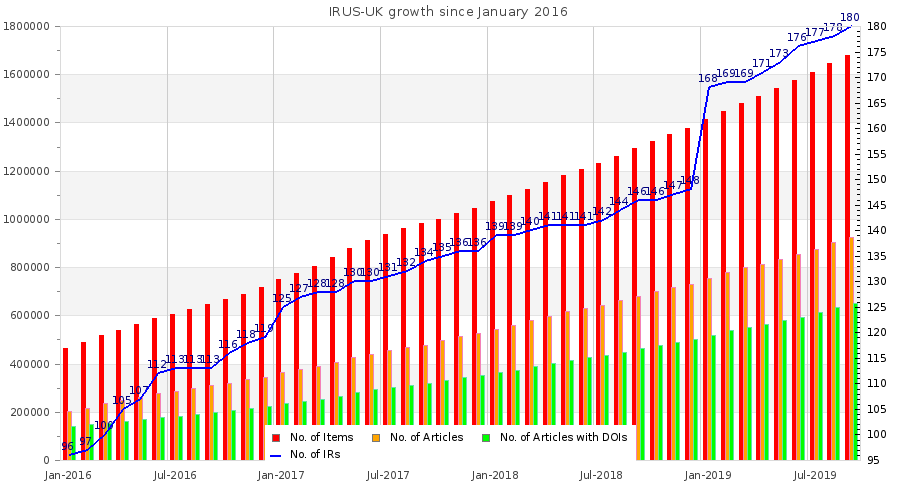

Another conjecture is that there is an antipathy to any plugin that could be collecting browsing history data: however “dumb” and programmatically erased that data may be, the concern over privacy is such that the universal adoption libraries may hope for is unachievable. The likeliest explanation is possibly around the tipping-point from subscription to OA. Despite the Apollo repository’s usage being one of the highest in the country (1.1 million article downloads from July 2018 to July 2019), Cambridge’s reading of Gold OA is c. 13% of total subscription content, including journal archives. A comparison with the proportions of percentage views by OA types in Unpaywall’s recently published data (cited above) suggests this is on the low side in terms of worldwide trends, but it must be emphasized that this is a subset of OA reading and excludes green, hybrid, and bronze. Just consider for instance the 1.5 billion downloads from arXiv globally to date.[8] Similarly, the stats from Unpaywall are overwhelmingly persuasive of the success of the plugin: as of February 2019 it delivered a million papers a day, 10 papers a second.

The inspirational statistician and “data artist” Edward Tufte wrote:

We thrive in information-thick worlds because of our marvellous and everyday capacities to select, edit, single out, structure, highlight, group, pair, merge, harmonize, synthesize, focus, organize, condense, reduce, boil down, choose, categorise, catalog, classify, list, abstract, scan, look into, idealize, isolate, discriminate, distinguish, screen, pigeonhole, pick over, sort, integrate, blend, inspect, filter, lump, skip, smooth, chunk, average, approximate, cluster, aggregate, outline, summarize, itemize, review, dip into, flip through, browse, glance into, leaf through, skim, refine, enumerate, glean, synopsize, winnow the wheat from the chaff, and separate the sheep from the goats.[9]

There’s thriving and there’s too much effort already. Any self-respecting OA plugin user will want to winnow, and make their own decisions on the plugin(s). In a less than 100% OA world, that combination of subscription and OA connection separated from physical location (on/off campus) is a critical advantage of the Lean Library offering, combined as it is with the Unpaywall database. Libraries will find much to critique in the institutional dashboards or analytics tools now built on top of some plugins (for instance, the distinction of physical location when accessing the alternative access version in the Kopernio usage).

From the OA plugin user’s perspective, the emerging cutting edge is currently with the CORE Discovery plugin, as reported at the Open Repositories 2019 conference, in the “first large scale quantitative comparison” of Unpaywall, OA Button, CORE OA Discovery and Kopernio. This report reveals important truths for OA plugin critical adopters, for instance showing less than expected overlap in comparison of the plugins’ returned results from the test sample of DOIs, and the assertion “we can improve hit rate by combining the outputs from multiple discovery tools”.[10]
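The arithmetic behind that assertion is straightforward: when tools overlap less than expected, the union of their results covers more of the sample than any single tool does. The sketch below illustrates this with entirely hypothetical tool names, DOIs and results; it does not reproduce the data or method of the reported comparison.

```python
from typing import Dict, Set


def hit_rate(hits: Set[str], sample: Set[str]) -> float:
    """Share of the sample DOIs for which an OA copy was found."""
    return len(hits & sample) / len(sample) if sample else 0.0


def combined_hits(results: Dict[str, Set[str]]) -> Set[str]:
    """Union of the DOIs found by any of the tools."""
    return set().union(*results.values())


# Hypothetical test sample of five DOIs and two hypothetical tools.
sample = {"doi-1", "doi-2", "doi-3", "doi-4", "doi-5"}
results = {
    "tool_a": {"doi-1", "doi-2"},
    "tool_b": {"doi-2", "doi-3"},
}

rate_a = hit_rate(results["tool_a"], sample)        # 2 of 5 found
rate_b = hit_rate(results["tool_b"], sample)        # 2 of 5 found
rate_all = hit_rate(combined_hits(results), sample)  # 3 of 5 found
```

In this made-up case each tool alone finds two of the five articles, while the two together find three, because only one DOI is found by both.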

It’s become popular for our present day Johnson to quote his namesake, so in that vogue we should expect the take-up of Lean Library and CORE Discovery to bring closer that “resistless Day” when researchers the world over get “immediate, free and unrestricted access to all of the latest, peer-reviewed research” and the “misty Doubt” over the OA discovery landscape will be lifted.[11]

[1] Flanagan, D. (2018). Open Access Discovery Workshop at the British Library, Living Knowledge blog 18 December 2018. DOI: https://dx.doi.org/10.22020/v652-2876

[2] Fahmy, S. (2019). Perspectives on the open access discovery landscape, JISC scholarly communications blog. https://scholarlycommunications.jiscinvolve.org/wp/2019/04/24/perspectives-on-the-open-access-discovery-landscape/

[3] Piwowar, H., Priem, J. & Orr, R. (2019). The future of OA: a large-scale analysis projecting Open Access publication and readership. DOI: https://www.biorxiv.org/content/10.1101/795310v1

[4] Hinchliffe, L. Janicke. (2018). What will you do when they come for your proxy server?, Scholarly Kitchen blog. https://scholarlykitchen.sspnet.org/2018/01/16/what-will-you-do-when-they-come-for-your-proxy-server-ra21/

[5] Ferguson, C. (2019). Leaning into browser extensions, Serials Review, v. 45, issue 1-2, p. 48-53.

[6] Fahmy, S. (2019). Perspectives on the open access discovery landscape, JISC scholarly communications blog. https://scholarlycommunications.jiscinvolve.org/wp/2019/04/24/perspectives-on-the-open-access-discovery-landscape/

[7] See the presentations from the LIBER 2019 conference on zenodo here https://zenodo.org/record/3259809#.XaA0Qr57lhF and here https://zenodo.org/record/3260301#.XaAz6757lhF

[8] arXiv monthly download rates, https://arxiv.org/stats/monthly_downloads

[9] Tufte, E. Envisioning information, Cheshire, Connecticut, Graphics Press, p. 50.

[10] Knoth, P. (2019). Analysing the performance of open access discovery tools, OR 2019, Hamburg, Germany. https://www.slideshare.net/petrknoth/analysing-the-performance-of-open-access-papers-discovery-tools

[11] Johnson, S., In Eliot, T. S., Etchells, F., Macdonald, H., Johnson, S., & Chiswick Press,. (1930). London: a poem: And The vanity of human wishes. London: Frederick Etchells & Hugh Macdonald. l. 146.

Published Monday 21 October 2019

Written by James Caudwell (Deputy Head of Periodicals & Electronic Subscriptions Manager, Cambridge University Library)