This is the second in a blog series about why we need to move towards Open Research. The first post about the mis-measurement problem considered issues with assessment. We now turn our attention to problems with authorship. Note that as before this is a topic of research in itself – and there is a rich vein of literature to be mined here for the interested observer.

Hyperauthorship

In May last year a high energy physics paper was published with over 5,000 authors. Of the 33 pages in this article, the paper occupied nine with the remainder listing the authors. This paper caused something of a storm of protest about ‘hyperauthorship’ (a term coined in 2001 by Blaise Cronin).

Nature published a news story on it, which was followed a week later by similar stories decrying the problem. The Independent published a story with the angle that many people are just coasting along without contributing. The Conversation’s take on the story looked at the challenge of effectively rewarding researchers. The Times Higher Education was a bit slower off the mark, in August publishing a story questioning whether mass authorship was destroying the credibility of papers.

This paper was featured in a keynote talk given at this year’s FORCE2016 conference. Associate Professor Cassidy Sugimoto from the School of Informatics and Computing, Indiana University Bloomington spoke about ‘Structural Disruptions in the Reward System of Science’ (video here). She noted that authorship is the coin of the realm, the pivot point of the whole scientific system, and that this has resulted in growth in the number of authors listed on a paper.

Sugimoto asked: What does ‘authorship’ mean when there are more authors than words in a document? This type of mass authorship raises concerns about fraud and attribution. Who is responsible if something goes wrong?

The authorship ‘proxy for credit’ problem

Of course not all of those 5,000 people actually contributed to the writing of the article – the activity we would normally associate with the word ‘authorship’. Scientific authorship does not follow the logic of literary authorship because of the nature of what is being written about.

In 1998 Biagioli (who has literally written the book on Scientific Authorship, or at least edited it) said, in a paper called ‘The Instability of Authorship: Credit and Responsibility in Contemporary Biomedicine’, that “the kind of credit held by a scientific author cannot be exchanged for money because nature (or claims about it) cannot be a form of private property, but belongs in the public domain”.

Facts cannot be copyrighted. The inability to write for direct financial remuneration in academia has implications for responsibility (addressed further down), but first let’s look at the issue of academic credit.

When we say ‘author’, what do we mean in this context? Often people are named as ‘authors’ on a paper because their inclusion will help to have the paper accepted, or as a token of thanks for providing the grant funding for the work. These practices are referred to as ‘gift authorship’, where co-authorship is awarded to a person who has not contributed significantly to the study.

In an attempt to stop some of the more questionable practices above, the International Committee of Medical Journal Editors (ICMJE) has defined what it means to be an author. Its definition says authorship should be based on:

- a substantial contribution

- drafting the work

- giving final approval and

- agreeing to be accountable for the integrity of the work.

The problem, as we keep seeing, is that authorship on a publication is the only thing that counts for reward. This means that ‘authorship’ is used as a proxy for crediting people’s contribution to the study.

Identifying contributions

Listing all of the people who had something to do with a research project as ‘authors’ on the final publication fails to credit different aspects of the labour involved in the research. In an attempt to address this, PLOS asks for the different contributions by those named on a paper to be defined on articles, with their guidelines suggesting categories such as Data Curation, Methodology, Software, Formal Analysis and Supervision (amongst many).

Sugimoto has conducted some research to find what this reveals about what people are contributing to scientific labour. In an analysis of PLOS data on contributorship, her team showed that in most disciplines the labour was distributed. This means that often the person doing the experiment is not the person who is writing up the work. (I should note that I was rather taken aback by this when it arose in interviews I conducted for my PhD).

It is not particularly surprising that in the Arts, Humanities and Social Sciences the listed ‘author’ is most often the person who wrote the paper. However, in Clinical Medicine, Biomedicine or Biology very few authors are associated with the task of writing. (As an aside, the analysis found women are disproportionately likely to be doing the experimentation, and men are more likely to be authoring, conceiving experimentation or obtaining resources.)

So, would it not be better if rather than placing the only emphasis on authorship of journal articles in high impact journals, we were able to reward people for different contributions to the research?

And while everyone takes credit, not all people take responsibility.

Authorship – taking responsibility

It is not just the issue of the inability to copyright ‘facts of nature’ that makes copyright unusual in academia. The academic reward system works on the ‘academic gift principle’ – academics provide the writing, the editing and the peer review for free and do not expect payment. The ‘reward’ is academic esteem.

This arrangement can seem very odd to an outsider who is used to the idea of work for hire. But there are broader implications than what is perceived to be ‘fair’ – and these relate to accountability. It is much more difficult to sue a researcher for making incorrect statements than it is to sue a person who writes for money (like a journalist).

Let us take a short meander into the world of academic fraud. Possibly the biggest, and certainly a highly contentious, case was that of Andrew Wakefield and the discredited (and retracted) claim that the MMR vaccine was associated with autism in children. This has been discussed at great length elsewhere – the latest study debunking the claim was published last year. Partly because of the way science is credited and copyright is handled, there were minimal repercussions for Wakefield. He is barred from practising medicine in the UK, but enjoys a career on the talkback circuit in the US. Recently a film about the MMR claims, directed by Wakefield, was briefly shown at the Tribeca film festival before protests saw it removed from the programme.

Another high profile case is Diederik Stapel, a Dutch social psychologist who entirely fabricated his data over many years. Despite several doctoral students’ work being based on this data, and over 70 articles having to be retracted, there were no charges laid. The only consequence he faced was having his professorship stripped.

Sometimes the consequences of fraud are tragic. A Japanese stem cell researcher, Haruko Obokata, who fabricated her results, had her PhD stripped from her. There were no criminal charges laid, but her supervisor committed suicide and the funding for the centre she was working in was cut. The work had been published in Nature, which then retracted it and published an editorial about the situation.

The question of scientific accountability is so urgent that there was a call last year to criminalise scientific misconduct in this paper. Indeed, things do seem to be changing slowly, and there have been some high profile cases where scientific fraud has resulted in criminal charges being laid. A former University of Queensland academic is currently facing fraud related charges over his fabricated results from a study into Parkinson’s disease and multiple sclerosis. This time last year, Dong-Pyou Han, a former biomedical scientist at Iowa State University in Ames, was sentenced to 57 months for fabricating and falsifying data in HIV vaccine trials. Han has also been fined US$7.2 million. In both cases the issue is the misuse of grant funding rather than the publication of false results.

The combination of great ‘reward’ from publication in high profile journals and little repercussion (other than having that ‘esteem’ taken away) has proven to be too great a temptation for some.

Conclusion

The need to publish in high impact journals has caused serious authorship issues – resulting in huge numbers of authors on some papers because it is the only way to allocate credit. And there is very little in the way we reward researchers that adequately allows for calling researchers to take responsibility when something goes wrong, in some cases resulting in serious fraud.

The next instalment in this series will look at ‘reproducibility, retractions and retrospective hypotheses’.

Rafael Carazo-Salas

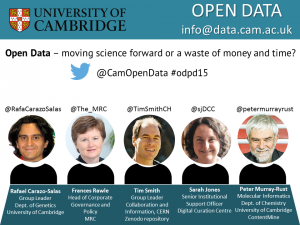

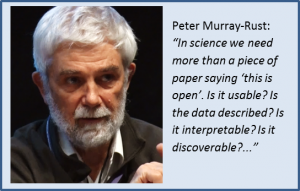

The discussion started off with a request for a definition of what “open” meant. Both Peter and Sarah explained that ‘open’ in science was not simply a piece of paper saying ‘this is open’. Peter said that ‘open’ meant free to use, free to re-use, and free to re-distribute without permission. Open data needs to be usable, it needs to be described, and to be interpretable. Finally, if data is not discoverable, it is of no use to anyone. Sarah added that sharing is about making data useful. Making it useful also involves the use of open formats, and implies describing the data. Context is necessary for the data to be of any value to others.

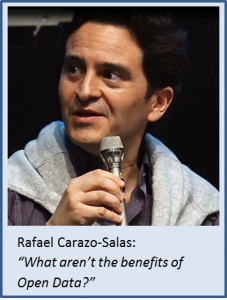

Next came a quick question from Danny: “What are the benefits of Open Data?”, followed by an immediate riposte from Rafael: “What aren’t the benefits of Open Data?”. Rafael explained that open data led to transparency in research, re-usability of data, benchmarking, integration, new discoveries and, most importantly, sharing data kept it alive. If data was not shared and instead simply kept on the computer’s hard drive, no one would remember it months after the initial publication. Sharing is the only way in which data can be used, cited, and built upon years after the publication. Frances added that research data originating from publicly funded research was funded by taxpayers. Therefore, the value of research data should be maximised. Data sharing is important for research integrity and reproducibility and for ensuring better quality of science. Sarah said that the biggest benefit of sharing data was the wealth of re-uses of research data, which often could not be imagined at the time of creation.

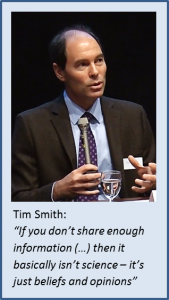

Tim also stressed that if open science became institutionalised, and mandated through policies and rules, it would take a very long time before individual researchers would fully embrace it and start sharing their research as the default position.

Frances mentioned that in the case of personal and sensitive data, sharing was not as simple as in basic sciences disciplines. Especially in medical research, it often required provision of controlled access to data. It was not only important who would get the data, but also what they would do with it. Frances agreed with Tim that perhaps what was needed is a paradigm shift – that questions should be sent to the data, and not the data sent to the questions.

Danny mentioned that the traditional way of rewarding researchers was based on publishing and on journal impact factors. She asked whether publishing data could help to start rewarding the process of generating data and making it available. Sarah suggested that rather than having the formal peer review of data, it would be better to have an evaluation structure based on the re-use of data – for example, valuing data which was downloadable, well-labelled, re-usable.

The final discussion was around incentives for data sharing. Sarah was the first one to suggest that the most persuasive incentive for data sharing is seeing the data being re-used and getting credit for it. She also stated that there was an important role for funders and institutions to incentivise data sharing. If funders/institutions wished to mandate sharing, they also needed to reward it. Funders could do so when assessing grant proposals; institutions could do it when looking at academic promotions.
