During August the Research Councils UK on behalf of the UK Open Research Data Forum released a draft Concordat on Open Research Data for which they have sought feedback.

The Universities of Bristol, Cambridge, Manchester, Nottingham and Oxford prepared a joint response which was sent to the RCUK on 28 September 2015. The response is reproduced below in full.

The initial main focus of the Concordat should be good data management, instead of openness.

The purpose of the Concordat is not entirely clear. Merely issuing it is unlikely to ensure that data is made openly available. If Universities and Research Institutes are expected to publicly state their commitment to the Principles then they risk the dissatisfaction of their researchers if insufficient funds are available to support the data curation that is described. As discussed in Comment #5 below, sharing research data in a manner that is useful and understandable requires putting research data management systems in place and having research data experts available from the beginning of the research process. Many researchers are only beginning to implement data management practices. It might be wiser to start with a Concordat on good data management before specifying expectations about open data. It would be preferable to first get to a point where researchers are comfortable with managing their data so that it is at least citeable and discoverable. Once that is more common practice, then the openness of data can be expected as the default position.

The scope of the Concordat needs to be more carefully defined if it is to apply to all fields of research.

The Introduction states that the Concordat “applies to all fields of research” but it is not clear how the first sentence of the Introduction translates for researchers in the Arts and Humanities (or in theoretical sciences, e.g. Mathematics). This sentence currently reads:

“Most researchers collect, measure, process and analyse data – in the form of sets of values of qualitative or quantitative variables – and use a wide range of hardware and software to assist them to do so as a core activity in the course of their research.”

The Arts and Humanities are mentioned in Principle #1, but this section also refers to benefits in terms of “progressing science”. We suggest that more input is sought specifically from academics in the Arts and Humanities, so that the wording throughout the Concordat is made more inclusive (or indeed exclusive, if appropriate).

The definition of research data in the Concordat needs to be relevant to all fields of research if the Concordat is to apply to all fields of research.

We suggest that the definition of data at the start of the document needs to be revised if it is to be inclusive of Arts and Humanities research (and theoretical sciences, e.g. Mathematics). The kinds of amendments that might be considered are indicated in italics:

“Research Data can be defined as evidence that underpins the answer to the research question, and can be used to validate findings regardless of its form (e.g. print, digital, or physical forms). These might be quantitative information or qualitative statements collected by researchers in the course of their work by experimentation, observation, interview or other methods, or information derived from existing evidence. Data may be raw or primary (e.g. direct from measurement or collection) or derived from primary data for subsequent analysis or interpretation (e.g. cleaned up or as an extract from a larger data set), or derived from existing sources where the copyright may be externally held. The purpose of open research data is not only to provide the information necessary to support or validate a research project’s observations, findings or outputs, but also to enable the societal and economic benefits of data reuse. Data may include, for example, statistics, collections of digital images, software, sound recordings, transcripts of interviews, survey data and fieldwork observations with appropriate annotations, an interpretation, an artwork, archives, found objects, published texts or a manuscript.”

The Concordat should include a definition of open research data.

To enable consistent understanding across Concordat stakeholders, we suggest that the definition of research data at the start of the document be followed by a definition of “openness” in relation to the reuse of data and content.

To illustrate, consider referencing The Open Definition which includes the full Open Definition, and presents the most succinct formulation as:

“Open data and content can be freely used, modified, and shared by anyone for any purpose”.

The Concordat refers to a process at the end of the research lifecycle, when what actually needs to be addressed is the support processes required before that point to allow it to occur.

Principle #9 states that “Support for the development of appropriate data skills is recognised as a responsibility for all stakeholders”. This refers to the requirement to develop skills and provision of specialised researcher training. These skills are almost non-existent and training does not yet exist in any organised form (as noted by Jisc in March this year). There is some research data management training for librarians provided by the Digital Curation Centre (DCC) but little specific training for data scientists. The level of researcher support and training required across all disciplines to fulfil expectations outlined in Principle #9 will require a significant increase in both the infrastructure and staffing.

The implementation of, and integration between, research data management systems (including systems external to institutions) is a complex process. It is an area of ongoing development across the UK research sector and will take time for institutions to establish. This is reflected by the final paragraphs of DCC reports on the DCC RDM 2014 Survey and discussions around gathering researcher requirements for RDM infrastructure at the IDCC15 conference of March this year. It is also illustrated by a draft list of basic RDM infrastructure components developed through a Jisc Research Data Spring pilot.

The Concordat must acknowledge the distance between where the Higher Education research sector currently stands and the expectation laid out. While initial good progress towards data sharing and openness has been made in the UK, it will require further substantial culture change to enact the responsibilities laid out in Principle #1 of the Concordat, and this should be recognised within the document. There will be a significant time lag before staff are in place to support the research data management process through the lifecycle of research, so that the information is in a state that it can be shared at the end of the process.

We suggest that the introduction to the Concordat should include text to reflect this, such as:

“Sharing research data in a manner that is useful and understandable requires putting integrated research data management systems in place and having research data experts available from the beginning of the research process. There is currently a deficit of knowledge and skills in the area of research data management across the research sector in the UK. This Concordat is intended to establish a set of expectations of good practice with the goal of establishing open research data as the desired position over the long term. It is recognised that this Concordat describes processes and principles that will take time to establish within institutions.”

The Concordat should clarify its scope in relation to publicly funded research data and research data that is funded from alternative sources or unfunded.

While the Introduction to the Concordat makes clear reference to publicly-funded research data, Principle #1 states that ‘it is the linking of data from a wide range of public and commercial bodies alongside the data generated by academic researchers’ that is beneficial. In addition, the ‘funders of research’ responsibilities should state whether these responsibilities relate only to public bodies, or wider (Principle #1).

The Concordat should propose sustainable solutions to fund the costs of the long-term preservation and curation of data, and how these costs can be borne by different bodies.

It is welcome that the Concordat states that costs should not fall disproportionately on a single part of the research community. However, currently the majority of costs are placed on the Higher Education Institutions (HEIs), which is not a sustainable position. There should be some clarification of how these costs could be met from elsewhere, for example by research funders. In addition, the Concordat should acknowledge that there will be a transition period in which there may be little or no funding to support open data, which will make it very difficult for HEIs to meet their responsibilities in the short to medium term. Furthermore, Principle #1 says that “Funders of Research will support open research data through the provision of appropriate resources as an acknowledged research cost.” It must be noted that several funders are at present reluctant or refusing to pay for the long-term preservation and curation of data.

The Concordat should propose solutions for paying for the cost of the long-term preservation and curation of data in cases where the ‘funders of research’ refuse to pay for this, or where research is unfunded. In the second paragraph of Principle #4 it is suggested that “…all parties should work together to identify the appropriate resource provider”. It would be useful to have some clarification about what the Working Group envisaged here. For example was it a shared national repository? Perhaps the RCUK (in collaboration with other UK funding bodies) could consider setting up a form of UK Data Service that meets the wider funding body audience for data of long-term value. This would also support the nature of collaboration and enable more re-use by increased data discoverability – data will not be stored at separate institutional repositories.

Additionally, there appears to be a contradiction between the statement in Principle #1 that “Funders of Research will support open research data through the provision of appropriate resources as an acknowledged research cost” and the statement in Principle #4: “…the capital costs for infrastructure may be incorporated into planned upgrades”, which suggests that Universities or Research Institutes will need to fund infrastructure and services from capital and operational budgets.

The Concordat should clarify how an appropriate proportionality between costs and benefits might be assessed.

Principle #4 states that: “Such costs [of open research data] should be proportionate to real benefits.” This key relationship needs further amplification. How and at what stage can “real benefits” be determined in order to assess the proportionality of potential costs? The Concordat should state more clearly the ‘real and achievable’ benefits of open data with examples. What is the relationship between the costs and the benefits? Has this relationship been explored? The real benefits of sharing research data will only become clear over time. At the moment it is difficult to quantify the benefits without evidence from the open datasets. Moreover, there might be an amount of time after a project is finished before the real benefits are realised. Are public funders going to put in monetary support for such services?

Additionally, the Concordat should specify to what extent research data should be made easily re-usable by others. Currently Principle #3 mentions: “Open research data should also be prepared in such a manner that it is as widely useable as is reasonably possible…”. What is the definition of “reasonably possible”? Preparing data for use by others might be expensive, depending on the complexity of the data, and this should also be taken into consideration when assessing the proportionality of potential costs of data sharing. Principle #4 states: “Both IT infrastructure costs and the on-going costs of training for researchers and for specialist staff, such as data curation experts, are expected to be significant over time.” These costs are indeed significant from the outset.

The Concordat (Principle #2) states: “A properly considered and appropriate research data management strategy should be in place before the research begins so that no data is lost or stored inappropriately. Wherever possible, project plans should specify whether, when and how data will be made openly available.” The Concordat should propose a process by which a proposal for data management and sharing in a particular research context is put forward for public funding. This proposal will need to include the cost-benefit analysis for deciding which data to keep and distribute (and how best to keep and distribute it).

In general, the Concordat must balance open data requirements with allowing researchers enough time and space to pursue innovation.

The Concordat should acknowledge the costs relating to undertaking regular reviews of progress towards open data.

Principle #4 refers to the following costs:

- “necessary costs – for IT infrastructure and services, administrative and specialist support staff, and for researchers’ time – are significant”

- “the additional and continuing revenue costs to sustain services – and rising volumes of data – for the long term are real and substantial”

- “Both IT infrastructure costs and the on-going costs of training for researchers and for specialist staff, such as data curation experts, are expected to be significant over time”

However, there is no explicit reference to costs relating to Principle #10 regarding “Regular reviews of progress towards open access to research data should be undertaken”.

We suggest that Principle #4 should include text to reflect this, and the kind of amendment that might be considered is indicated in italics:

For research organisations such as universities or research institutes, these costs are likely to be a prime consideration in the early stages of the move to making research data open. Both IT infrastructure costs and the on-going costs of training for researchers and for specialist staff, such as data curation experts, are expected to be significant over time. Significant costs will also arise from Principle #10 regarding the undertaking of regular reviews of progress towards open access to research data.

The Concordat should explore the establishment of a central organisation to lead the transformation towards a cohesive UK research data environment.

Principle #3 states: “Data must be curated […] This can be achieved in a number of ways […] However, these methodologies may vary according to subject and disciplinary fields, types of data, and the circumstances of individual projects. Hence the exact choice of methodology should not be mandated”.

Realising the benefits of curation may have significant costs where curation extends over the long term, such as data relating to nuclear science which may need to be usable for at least 60 years. These benefits would be best achieved, and in a cost-effective manner, through the establishment of a central organisation that will lead the creation of a cohesive national collection of research resources and a richer data environment that will:

- Make better use of the UK’s research outputs

- Enable UK researchers to easily publish, discover, access and use data

- Develop discipline-specific guidelines on data and metadata standards

- Suggest discipline-specific curation and preservation policies

- Develop protocols and processes for the access to restricted data

- Enable new and more efficient research

In Australia this capacity is provided by the Australian National Data Service.

The Concordat should address the issues around sharing research data resulting from collaborations, especially international collaborations.

It has to be explicitly recognised that some researchers will be involved in international collaborations, with collaborators who are not publicly funded, or whose funders do not require research data sharing. Procedures (and possible exemptions) for sharing of research data in such circumstances should be discussed in the Concordat.

Additionally, the Concordat should suggest a sector-wide approach when considering the costs and complexities of research involving multiple institutions. Currently where multiple institutions are producing research data for one project there is a danger that it is deposited in multiple repositories which is neither pragmatic nor cost-effective.

Non-public funders need to be consulted about sharing of commercially-sponsored data, and the Concordat should acknowledge the possibility of restricting the access to research data resulting from commercial collaborations.

Since the Concordat makes recommendations with regard to making commercially-sponsored data accessible, significant conversations with non-public funders are needed. Otherwise, there is a risk that the expectations on industry will not be met. The current wording could damage industrial appetite to fund academic research if industry partners are pushed towards openness without major consultation.

We also suggest that in the second paragraph of Principle #5, the sentence: “There is therefore a need to develop protocols on when and how data that may be commercially sensitive should be made openly accessible, taking account of the weight and nature of contributions to the funding of collaborative research projects, and providing an appropriate balance between openness and commercial incentives.” is changed to “There is therefore a need to develop protocols on whether, when and how data that may be commercially sensitive should be made openly accessible, taking account of the weight and nature of contributions to the funding of collaborative research projects, and providing an appropriate balance between openness and commercial incentives.” The Concordat should also recognise that development and execution of these processes is an additional burden on institutional administrative staff which must not be underestimated.

The Concordat should more generally recognise the increasing economic value of data produced by researchers.

Where commercial benefits can be quantified (such as the return on investment of a research project) this should be recognised as a reason to embargo access to data until such things as patents can be successfully applied for. University bodies charged with the commercialization of research should be entitled to assess the potential value of research before consenting to data openness.

The Concordat should allow the use of embargo periods to allow release of data to be delayed up to a certain time after publication, where this is appropriate and justifiable.

The Concordat expects research data underpinning publications to be made accessible by the publication date (Principles #6 and #8). This does not, however, take into account disciplinary norms, where sometimes access to research data is delayed until a specified time after publication. For example, in crystallography (Protein Data Bank) the community has agreed a maximum 12-month delay between publishing the first paper on a structure and making coordinates public for secondary use. Delays in making data accessible are accepted by funders. For example, the BBSRC allows exemptions for disciplinary norms, and where best practices do not exist BBSRC suggests release within three years of generation of the dataset; the STFC expects research data from which the scientific conclusions of a publication are derived to be made available within six months of the date of the relevant publication. Research data should be discoverable at the time of publication, but it may be justifiable to delay access to the data.

The Concordat should make mention of the difficulties involved with ethical issues of data sharing, including issues around data licensing, and data use by others.

Ethical issues surrounding release and use of research data are briefly mentioned in Principle #5 and Principle #7. We believe the Concordat could benefit from expansion on the ethical issues surrounding release and use of research data, and advice on how these can be addressed in data sharing agreements. This is a large and complex area that would benefit from a national framework of best practice guidelines and methods of monitoring.

Furthermore, the Concordat does not provide any recommendations about research data licensing. This should be discussed together with issues about associated expertise required, costs and time. It is mentioned briefly above in point 4.

The Concordat’s stated expectations regarding the use of non-proprietary formats should be realistic.

Principle #3 states that:

“Open research data should also be prepared in such a manner that it is as widely useable as is reasonably possible, at least for specialists in the same or linked fields, wherever they are in the world. Any requirement to use specialised software or obscure data manipulations should be avoided wherever possible. Data should be stored in non-proprietary formats wherever possible, or the most commonly used proprietary formats if no equivalent non-proprietary format exists.”

The last two sentences of this paragraph could be regarded as unreasonable, depending on the definition of what is ‘possible’. It might theoretically be possible to convert data for storage but not remotely cost-effective. Other formulations (e.g. from EPSRC) talk about the burden of retrieval from stored formats being on the requester not the originator of the data.

We suggest that this section should be rephrased in-line with EPSRC recommendations, for example:

“Wherever possible, researchers are encouraged to store research data in non-proprietary formats. If this is not possible (or not cost-efficient), researchers should indicate what proprietary software is needed to process research data. Those requesting access to data are responsible for re-formatting it to suit their own research needs and for obtaining access to proprietary third party software that may be necessary to process the data.”

The Concordat should encourage proper management of physical samples and non-digital research data.

The Concordat should also encourage proper management of physical samples, and other forms of non-digital research data. Physical samples such as fossils, core samples, zoological and botanical samples, and non-digital research data such as recordings, papers, notes, etc. should also be properly managed. In some areas the management and sharing of these items is well constructed and understood – for example, palaeontology journals will not allow people to publish without the specimen numbers from a museum – but it is less rigid in other areas of research. It would be desirable for the Concordat to encourage development of discipline-specific guidelines for management of physical samples and other non-digital research data.

Principle #5 must recognise the culture change required to remove the decision to share data from an individual researcher.

Principle #5 states that:

“Decisions on withholding data should not generally be made by individual researchers but rather through a verifiable and transparent process at an appropriate institutional level.”

Whilst the reasoning behind this Principle is understandable, it must recognise that we are not yet in a mature culture of data sharing. A statement removing data sharing decisions from the researcher will require changes in workflows and, more importantly, in the culture and autonomy of researchers.

The idea that open research data should be formally acknowledged as a legitimate output of the research should form a separate principle.

The last paragraph of Principle #6 states that open research data should be acknowledged as a legitimate output of the research and that it “…should be accorded the same importance in the scholarly record as citations of other research objects, such as publications”. We strongly support this idea but recognise that this is a fundamental shift in working practices and policies. We are probably still several years off from seeing formal citation of datasets as an embedded practice for researchers and the development of products/services around the resulting metrics. This point is completely separate from the rest of Principle #6 and should form a principle in its own right.

Principle #2 must recognise that it may take significant resource for institutions to provide the infrastructure required for good data management.

While the focus of this Principle on good data management through the lifecycle, rather than the focus on open data sharing, is welcome, there are significant human, technical and sociotechnical developments required to meet this requirement; and also resources, in terms of people, time and infrastructure, that will be needed to shift to a mature position. These needs should be recognised in the Concordat.

The Concordat should clarify the reference to “other workers” in Principle #7.

We would value some clarification on paragraph 3 of Principle #7 in relation to the reference to “other workers”: “Research organisations bear the primary responsibility for enforcing ethical guidelines and it is therefore vital that such guidelines are amended as necessary to make clear the obligations that are inherent in the use of data gathered by other workers.”

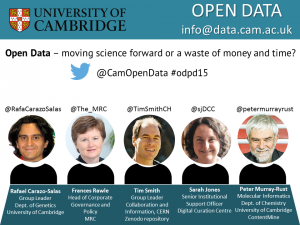

Rafael Carazo-Salas, Group Leader, Department of Genetics, University of Cambridge

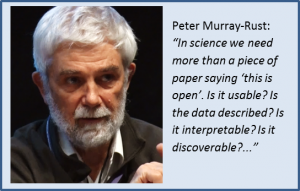

The discussion started off with a request for a definition of what “open” meant. Both Peter and Sarah explained that ‘open’ in science was not simply a piece of paper saying ‘this is open’. Peter said that ‘open’ meant free to use, free to re-use, and free to re-distribute without permission. Open data needs to be usable, it needs to be described, and to be interpretable. Finally, if data is not discoverable, it is of no use to anyone. Sarah added that sharing is about making data useful. Making it useful also involves the use of open formats, and implies describing the data. Context is necessary for the data to be of any value to others.

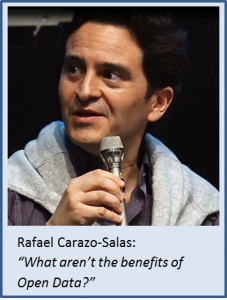

Next came a quick question from Danny: “What are the benefits of Open Data?”, followed by an immediate riposte from Rafael: “What aren’t the benefits of Open Data?” Rafael explained that open data led to transparency in research, re-usability of data, benchmarking, integration, new discoveries and, most importantly, sharing data kept it alive. If data was not shared and instead simply kept on the computer’s hard drive, no one would remember it months after the initial publication. Sharing is the only way in which data can be used, cited, and built upon years after the publication. Frances added that research data originating from publicly funded research was funded by taxpayers. Therefore, the value of research data should be maximised. Data sharing is important for research integrity and reproducibility and for ensuring better quality of science. Sarah said that the biggest benefit of sharing data was the wealth of re-uses of research data, which often could not be imagined at the time of creation.

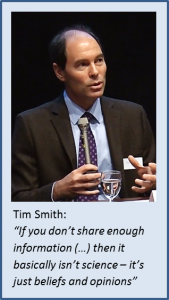

Tim also stressed that if open science became institutionalised, and mandated through policies and rules, it would take a very long time before individual researchers would fully embrace it and start sharing their research as the default position.

Frances mentioned that in the case of personal and sensitive data, sharing was not as simple as in basic sciences disciplines. Especially in medical research, it often required provision of controlled access to data. It was not only important who would get the data, but also what they would do with it. Frances agreed with Tim that perhaps what was needed is a paradigm shift – that questions should be sent to the data, and not the data sent to the questions.

Danny mentioned that the traditional way of rewarding researchers was based on publishing and on journal impact factors. She asked whether publishing data could help to start rewarding the process of generating data and making it available. Sarah suggested that rather than having the formal peer review of data, it would be better to have an evaluation structure based on the re-use of data – for example, valuing data which was downloadable, well-labelled, re-usable.

The final discussion was around incentives for data sharing. Sarah was the first one to suggest that the most persuasive incentive for data sharing is seeing the data being re-used and getting credit for it. She also stated that there was an important role for funders and institutions to incentivise data sharing. If funders/institutions wished to mandate sharing, they also needed to reward it. Funders could do so when assessing grant proposals; institutions could do it when looking at academic promotions.
