Aaaaaaaaaaargh! was Mark Carden’s summary of the second annual Researcher to Reader conference, along with a plea that the different players show respect to one another. My take-home messages were slightly different:

- Publishers should embrace values of researchers & librarians and become more open, collaborative, experimental and disinterested.

- Academic leaders and institutions should do their bit in combating the metrics focus.

- Big Deals don’t save libraries money; what helps them is the ability to cancel journals.

- The ‘green OA = subscription cancellations’ argument is only viable in a utopian, almost fully green world.

- There are serious issues in the supply chain of getting books to readers.

- And copyright arrangements in academia do not help scholarship or protect authors*.

The programme for the conference included a mix of presentations, debates and workshops. The Twitter hashtag is #r2rconf.

As is inevitable in the current climate, particularly at a conference where there were quite a few Americans, the shadow of Trump was cast over the proceedings. There was much mention of the political upheaval and the place research and science have in this.

[*please see Kent Anderson’s comment at the bottom of this blog]

In the publishing corner

Time for publishers to rise to the challenge

The conference opened with an impassioned speech by Mark Allin, the President and CEO of John Wiley & Sons, who started with the statement this was “not a time for retreat, but a time for outreach and collaboration and to be bold”.

The talk was not what was expected from a large commercial publisher. Allin asked: “How can publishers act as advocates for truth and knowledge in the current political climate?” He mentioned that Proquest has launched a displaced researchers programme in reaction to world events, saying, “it’s a start but we can play a bigger role”.

Allin asked what publishers can do to ensure research is being accessed. Referencing “The Content Trap” by Bharat Anand, Allin said: “We won’t as a media industry survive as a media content and putting it in a bottle and controlling its distribution. We will only succeed if we connect the users. So we need to re-engineer the workflows making them seamless, frictionless.” He added: “We should be making sure that … we are offering access to all those who want it.”

Allin raised the issue of access, noting that ResearchGate has more usage than any single publisher. He made the point that “customers don’t care if it is the version of record, and don’t care about our arcane copyright laws”. This is why people use SciHub: it offers ease of access. He said publishers should not give up protecting copyright but must realise its limitations and provide easy access.

Researchers are the centre of gravity – we need to help them spend more time researching and less time publishing, he said. There is a lesson here, he noted: suppliers should use “the divine discontent of the customer as their north star”. He used the example of Amazon to suggest people working in scholarly communication need to use technology much better to connect up. “We need to experiment more, do more, fail more, be more interconnected,” he said, adding that “publishing needs open source and open standards”, which are required for transformational impact on scholarly publishing – “the Uber equivalent”.

His suggestion for addressing the challenges of these sharing platforms is to “try and make your experience better than downloading from a pirate site”, and that this would be a better response than taking the legal route and issuing takedown notices. He asked: “Should we give up? No, but we need to recognise there are limits. We need to do more to enable access.”

Allin questioned the current situation: publishing may have gone online, but how much has the internet really changed scholarly communication practices? The page is still a unit of publishing, even in digital workflows. It shouldn’t be; we should have a ‘digital first’ workflow. The question isn’t ‘what should the workflow look like?’, but ‘why hasn’t it improved?’, he said, noting that innovation is always slowed by social norms, not technology. Publishers should embrace values of researchers & librarians and become more open, collaborative, experimental and disinterested.

So what do publishers do?

Publishers “provide quality and stability”, according to Kent Anderson (no relation to Rick Anderson), speaking on the second day in his presentation about ‘how to cook up better results in communicating research’. Anderson is the CEO of Redlink, a company that provides publishers and libraries with analytic and usage information. He is also the founder of the blog The Scholarly Kitchen.

Anderson made the argument that “publishing is more than pushing a button”, by expanding on his blog post on ‘96 things publishers do’. This talk differed from Allin’s because it focused on the contribution of publishers.

Anderson talked about the peer review process, noting that rejections help academics because usually they are about mismatch. He said that articles do better in the second journal they’re submitted to.

During a discussion about submission fees, Anderson noted that these “can cover the costs of peer review of rejected papers but authors hate them because they see peer review as free”. His comment that a $250 journal submission charge with one journal is justified by the fact that the target market (orthopaedic surgeons) ‘are rich’ received (rather unsurprisingly) some response from the audience via Twitter.

Anderson also made the accusation that open access publishers take lower quality articles when money gets tight. This caused something of a backlash in the Twitter discussion, with requests for a citation for this statement and for examples of publishers, other than scam publishers, lowering standards to bring in more APC income. [ADDENDUM: Kent Anderson below says that this was not an ‘accusation’ but an ‘observation’. The Twitter challenge for ‘citation please?’ holds.]

There were a couple of good points made by Anderson. He argued that one of the ways publishers add value is by training editors. This is supported by a small survey we undertook with the research community at Cambridge last year, which revealed that 30% of the editors who responded felt they needed more training.

The library corner

The green threat

There is good reason to expect that green OA will make people and libraries cancel their subscriptions; at least it will in the utopian future described by Rick Anderson (no relation to Kent Anderson), Associate Dean at the University of Utah, in his talk “The Forbidden Forecast, Thinking about open access and library subscriptions”.

Anderson started by asking why, if we’re in a library funding crisis, aren’t we seeing sustained levels of unsubscription? He then explained that Big Deals don’t save libraries money. They lower the cost per article, but this is a value measure, not a cost measure. What the Big Deal did was make cancellations more difficult. Most libraries have cancelled every journal that they can without Faculty ‘burning down the library’, to preserve the Big Deal. This explains the persistence of subscriptions over time. The library is forced to redirect money away from other resources (books) and into the serials budget. The reason we can get away with this is that books are not used much.

The wolf seems to be well and truly upon us. There have been lots of cancellations and reduction of library budgets in the USA (a claim supported by a long list of examples). The number of cancellations grows as the money being siphoned off book budgets runs out.

Anderson noted that the emergence of new gold OA journals doesn’t help libraries; it does nothing to relieve the journal emergency. These journals just add to the list of costs because each one is a unique set of content. What does help libraries is the ability to cancel journals. Professor Syun Tutiya, Librarian Emeritus at Chiba University, noted in a separate session that if Japan were to flip from a fully subscription model to APCs it would be about the same cost, so that would solve the problem.

Anderson said that there is an argument that “there is no evidence that green OA cancels journals” (I should note that I am well and truly in this camp, see my argument). Anderson’s argument is that this amounts to saying the future hasn’t happened yet. The implicit argument here is that because green OA has not caused cancellations so far, it won’t do so in the future.

Library money is taxpayers’ money – it is not always going to flow. There is much greater scrutiny of journal big deals as budgets shrink.

Anderson argued that green open access provides inconsistent and delayed access to copies which aren’t always the version of record, and this has protected subscriptions. He noted that Green OA is dependent on subscription journals, which is “ironic given that it also undermines them”. You can’t make something completely & freely available without undermining the commercial model for that thing, Anderson argued.

So, Anderson said, given green OA exists and has for years, and has not had any impact on subscriptions, what would need to happen for this to occur? Anderson then described two subscription scenarios. The low cancellation scenario (which is the current situation) is where green open access is provided sporadically and unreliably. In this situation, access is delayed by a year or so, and the versions available for free are somewhat inferior.

The high cancellation scenario is where there is high uptake of green OA because there are funder requirements and the version is close to the final one. Anderson argued that the “OA advocates” prefer this scenario and they “have not thought through the process”. If the cost of finding which journals have OA versions is low enough and the free versions are good enough, he said, subscriptions will be cancelled. The black and white version of Anderson’s future is: “If green OA works then subscriptions fail, and the reverse is true”.

Not surprisingly I disagreed with Anderson’s argument, based on several points. To start, there would need to be a certain percentage of the work available before a subscription could be cancelled. Professor Syun Tutiya, Librarian Emeritus at Chiba University, noted in a different discussion that in Japan only 6.9% of material is available Green OA in repositories and argued that institutional repositories are good for lots of things but not OA. Certainly in the UK, with the strongest open access policies in the world, we are not capturing anything like the full output. And the UK is itself only 6% of the research output for the world, so we are certainly a very long way away from this scenario.

In addition, according to work undertaken by Michael Jubb in 2015, most of the green Open Access material is available in places other than institutional repositories, such as ResearchGate and SciHub. Do librarians really feel comfortable cancelling subscriptions on the basis of something being available in a proprietary or illegal format?

The researcher perspective

Stephen Curry, Professor of Structural Biology, Imperial College London, spoke about “Zen and the Art of Research Assessment”. He started by asking why people become researchers and gave several reasons: to understand the world, change the world, earn a living and be remembered. He then asked how they do it. The answer is to publish in high impact journals and bring in grant money. But this means it is easy to lose sight of the original motivations, which are easier to achieve if we are in an open world.

In discussing the report published in 2015, which looked into the assessment of research, “The Metric Tide”, Curry noted that metrics & league tables aren’t without value. They do help to rank football teams, for example. But university league tables are less useful because they aggregate many things so are too crude, even though they incorporate valuable information.

Are we as smart as we think we are, he asked, if we subject ourselves to such crude metrics of achievement? The limitations of research metrics have been talked about a lot but they need to be better known. Often they are too precise. For example, was Caltech really better than the University of Oxford last year but worse this year?

But numbers can be seductive. Researchers want to focus on research without pressure from metrics; however, many Early Career Researchers and PhD students are increasingly fretting about the hierarchy of publications. Curry asked “On your death bed will you be worrying about your H-Index?”

There is a greater pressure to publish rather than pressure to do good science. We should all take responsibility to change this culture. Assessing research based on outputs is creating perverse incentives. It’s the content of each paper that matters, not the name of the journal.

In terms of solutions, Curry suggested it would be better to put higher education institutions in 5% brackets rather than ranking them 1-n in the league tables. Curry called for academic leaders and institutions to do their bit in combating the metrics focus. He also called for much wider adoption of the Declaration On Research Assessment (known as DORA). Curry’s own institution, Imperial College London, has done so recently.
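To make the bracket idea concrete, here is a minimal sketch of how a league table could report 5% bands instead of exact positions. It is only an illustration of the suggestion as reported above, not a method Curry presented: the function name `bracket_institutions`, the placeholder institution names and the scores are all hypothetical, and ties are handled naively by sort order.

```python
def bracket_institutions(scores, bracket_pct=5):
    """Group institutions into percentile brackets instead of ranks 1..n.

    `scores` maps a (hypothetical) institution name to an aggregate score,
    higher being better. Returns a mapping of name -> label such as "top 5%".
    """
    if not isinstance(bracket_pct, int) or not 0 < bracket_pct <= 100:
        raise ValueError("bracket_pct must be an integer between 1 and 100")

    # Order from best to worst score.
    ordered = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    n = len(ordered)

    brackets = {}
    for position, (name, _score) in enumerate(ordered, start=1):
        # Integer ceiling division avoids floating point rounding surprises:
        # how many whole brackets are needed to cover this position?
        bracket_index = -(-(position * 100) // (n * bracket_pct))
        brackets[name] = f"top {bracket_index * bracket_pct}%"
    return brackets


# Placeholder data: two institutions separated by a tiny margin.
table = {
    "Institution A": 95.1,
    "Institution B": 95.0,
    "Institution C": 60.0,
    "Institution D": 40.0,
}
for name, label in bracket_institutions(table, bracket_pct=50).items():
    print(name, label)
```

With only four placeholder institutions the example uses 50% bands so that the grouping is visible; the point is that two institutions separated by a fraction of a point share a band rather than swapping places from year to year.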

Curry argued that ‘indicators’ would be a more appropriate term than ‘metrics’ in research assessment because we’re looking at proxies. The term metrics implies you know what you are measuring. Certainly metrics can inform but they cannot replace judgement. Users and providers must be transparent.

Another solution is preprints, which shift attention from container to content because readers use the abstract, not the journal name, to decide which papers to read. Note that this idea is starting to become more mainstream with the request for information issued by the NIH towards the end of last year, “Including Preprints and Interim Research Products in NIH Applications and Reports”.

Copyright discussion

I sat on a panel to discuss copyright with a funder – Mark Thorley, Head of Science Information, Natural Environment Research Council; a lawyer – Alexander Ross, Partner, Wiggin LLP; and a publisher – Dr Robert Harington, Associate Executive Director, American Mathematical Society.

My argument** was that selling or giving the copyright to a third party that has a purely commercial interest and did not contribute to the creation of the work does not protect originators. That was the case in the Kookaburra song example. It is also the case in academic publishing. The copyright transfer form/publisher agreement that authors sign usually means that the authors retain their moral rights to be named as the authors of the work, but they sign away rights to make any money out of it.

I argued that publishers don’t need to hold the copyright to ensure commercial viability. They just need first exclusive publishing rights. We really need to sit down and look at how copyright is being used in the academic sphere – who does it protect? Not the originators of the work.

Judging by the mood in the room, the debate could have gone on for considerably longer. There is still a lot of meat on that bone. (**See the end of this blog for details of my argument).

The intermediary corner

The problem of getting books to readers

There are serious issues in the supply chain of getting books to readers, according to Dr Michael Jubb, Independent Consultant and Richard Fisher from Something Understood Scholarly Communication.

The problems are multi-pronged. For a start, discoverability of books is “disastrous” due to completely different metadata standards in the supply chain. ONIX is used for the retail trade and MARC is standard for libraries. Neither has detailed information for authors, information about the contents of chapters, sections etc, or information about reviews and comments.

There are also a multitude of channels for getting books to libraries. There has been involvement in the past few years of several different kinds of intermediaries – metadata suppliers, sales agents, wholesalers, aggregators, distributors etc – who are holding digital versions of books that can be supplied through the different types of book platforms. Libraries have some titles on multiple platforms but others only available on one platform.

There are also huge challenges around discoverability and the e-commerce systems, which are “too bitty”. The most important change that has happened in books has been Amazon; however, publisher e-commerce “has a long way to go before it is anything like as good as Amazon”.

Fisher also reminded the group that there are far more books published each year than there are journals – it’s a more complex world. He noted that about 215 [NOTE: amended from original 250 in response to Richard Fisher’s comment below] different imprints were used by British historians in the last REF. Many of these publishers are very small with very small margins.

Jubb and Fisher both emphasised readers’ strong preference for print, which implies that much more work is needed on the ebook user experience. There are ‘huge tensions’ between reader preference (print) and the drive for e-book acquisition models at libraries.

The situation is probably best summed up in the statement that “no-one in the industry has a good handle on what works best”.

Providing efficient access management

Current access control is not functional in the world we live in today. If you ask users to jump through hoops to get access off campus then your whole system defeats its purpose. That was the central argument of Tasha Mellins-Cohen, the Director of Product Development at HighWire Press, when she spoke about the need to improve access control.

Mellins-Cohen started with the comment “You have one identity but lots of identifiers”, and noted if you have multiple institutional affiliations this causes problems. She described the process needed for giving access to an article from a library in terms of authentication – which, as an aside, clearly shows why researchers often prefer to use Sci Hub.

She described an initiative called CASA (Campus Activated Subscriber-Access), which records devices that have access on campus through authenticated IP ranges and then allows access off campus on the same device without using a proxy. This is designed to use more modern authentication. There will be “more information coming out about CASA in the next few months”.
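The general idea described in the talk, remembering a device seen inside an authenticated campus IP range and honouring it later off campus, can be sketched roughly as follows. This is not how CASA is actually implemented: the class name `DeviceAccessRegistry`, the device identifiers, the 30-day grant lifetime and the documentation-only IP ranges are all assumptions made purely for illustration.

```python
import ipaddress
from datetime import datetime, timedelta


class DeviceAccessRegistry:
    """Toy model of device-based access that is activated on campus.

    Hypothetical sketch only: the names, the grant lifetime and the data
    structures are assumptions, not details of the real CASA system.
    """

    def __init__(self, campus_networks, grant_days=30):
        # Authenticated campus IP ranges, e.g. ["192.0.2.0/24"].
        self.campus_networks = [ipaddress.ip_network(n) for n in campus_networks]
        self.grant_lifetime = timedelta(days=grant_days)
        self.grants = {}  # device_id -> expiry time of the off-campus grant

    def _on_campus(self, ip):
        address = ipaddress.ip_address(ip)
        return any(address in network for network in self.campus_networks)

    def has_access(self, device_id, ip, now):
        if self._on_campus(ip):
            # Seen on campus: allow access and (re)start the device's grant.
            self.grants[device_id] = now + self.grant_lifetime
            return True
        # Off campus: allow only if this device holds an unexpired grant.
        expiry = self.grants.get(device_id)
        return expiry is not None and now < expiry


registry = DeviceAccessRegistry(["192.0.2.0/24"])
start = datetime(2017, 2, 20)
registry.has_access("laptop-1", "192.0.2.15", start)  # activated on campus
print(registry.has_access("laptop-1", "198.51.100.7", start + timedelta(days=10)))
```

In this sketch the reader never touches a proxy or a login page off campus; the cost is that access follows the device rather than the person, so the grant needs an expiry.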

Mellins-Cohen noted that tagging something as ‘free’ in the metadata improves Google indexing – publishers need to do more of this at article level. This comment prompted a call-out to publishers to make the information about sharing more accessible to authors through How Can I Share It?

Mellins-Cohen expressed some concern that some of the ideas coming out of RA21 (Resource Access for the 21st Century), an STM project to explore alternatives to IP authentication, will raise barriers to access for researchers.

Summary

It is always interesting to have the mix of publishers, intermediaries, librarians and others in the scholarly communication supply chain together at a conference such as this. It is rare to have the conversations between different stakeholders across the divide. In his summary of the event, Mark Carden noted the tension in the scholarly communication world, saying that we do need a lively debate but also need to show respect for one another.

So while the keynote started promisingly, and said all the things we would like to hear from the publishing industry, there is still the reality that we are not there yet. And this underlines the whole problem. This interweb thingy didn’t happen last week. What has actually happened to update the publishing industry in the last 20 years? Very little it seems. However it is not all bad news. Things to watch out for in the near future include plans for micro-payments for individual access to articles, according to Mark Allin, and the highly promising Campus Activated Subscriber-Access system.

Danny Kingsley attended the Researcher to Reader conference thanks to the support of the Arcadia Fund, a charitable fund of Lisbet Rausing and Peter Baldwin.

Published 27 February 2017

Written by Dr Danny Kingsley

Copyright case study

In my presentation, I spoke about the children’s campfire song, “Kookaburra sits in the old gum tree”, which was written by Melbourne schoolteacher Marion Sinclair in 1932 and first aired in public two years later as part of a Girl Guides jamboree in Frankston. Sinclair had to be prompted to go to APRA (Australasian Performing Right Association) to register the song. That was in 1975; the song had already been around for 40 years, but she never expressed any great proprietary interest in it.

In 1981 the Men at Work song “Down Under” made No. 1 in Australia. The song then topped the UK, Canada, Ireland, Denmark and New Zealand charts in 1982 and hit No.1 in the US in January 1983. It sold two million copies in the US alone. When Australia won the America’s Cup in 1983 Down Under was played constantly. It seems extremely unlikely that Marion Sinclair did not hear this song. (At the conference, three people self-identified as never having heard the song when a sample of the song was played.)

Marion Sinclair died in 1988 and the song went to her estate. Norman Lurie, managing director of Larrikin Music Publishing, bought the publishing rights from her estate in 1990 for just $6100. He started tracking down all the chart music that had been printed all over the world, because Kookaburra had been used in books for people learning flute and recorder.

In 2007 TV show Spicks and Specks had a children’s music themed episode where the group were played “Down Under” and asked which Australian nursery rhyme the flute riff was based on. Eventually they picked Kookaburra, all apparently genuinely surprised when the link between the songs was pointed out. There is a comparison between the music pieces.

Two years later Larrikin Music filed a lawsuit, initially wanting 60% of Down Under’s profits. In February 2010, Men at Work appealed, and eventually lost. The judge ordered Men at Work’s recording company, EMI Songs Australia, and songwriters Colin Hay and Ron Strykert to pay 5% of royalties earned from the song since 2002 and from its future earnings.

In the end, Larrikin won around $100,000, although legal fees on both sides have been estimated to be upwards of $4.5 million, with royalties for the song frozen during the case.

Gregory Ham was the flautist in the band who played the riff. He did not write Down Under, and was devastated by the high profile court case and his role in proceedings. He reportedly fell back into alcohol abuse and was quoted as saying: “I’m terribly disappointed that’s the way I’m going to be remembered — for copying something.” Ham died of a heart attack in April 2012 in his Carlton North home, aged 58, with friends saying the lawsuit was haunting him.

This case, I argued, exemplifies everything that is wrong with copyright.

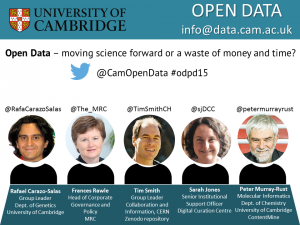

Rafael Carazo-Salas

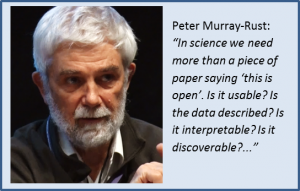

Rafael Carazo-Salas The discussion started off with a request for a definition of what “open” meant. Both Peter and Sarah explained that ‘open’ in science was not simply a piece of paper saying ‘this is open’. Peter said that ‘open’ meant free to use, free to re-use, and free to re-distribute without permission. Open data needs to be usable, it needs to be described, and to be interpretable. Finally, if data is not discoverable, it is of no use to anyone. Sarah added that sharing is about making data useful. Making it useful also involves the use of open formats, and implies describing the data. Context is necessary for the data to be of any value to others.

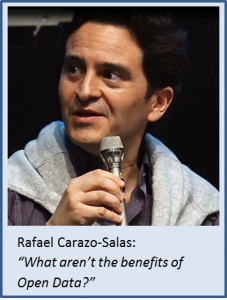

The discussion started off with a request for a definition of what “open” meant. Both Peter and Sarah explained that ‘open’ in science was not simply a piece of paper saying ‘this is open’. Peter said that ‘open’ meant free to use, free to re-use, and free to re-distribute without permission. Open data needs to be usable, it needs to be described, and to be interpretable. Finally, if data is not discoverable, it is of no use to anyone. Sarah added that sharing is about making data useful. Making it useful also involves the use of open formats, and implies describing the data. Context is necessary for the data to be of any value to others. Next came a quick question from Danny: “What are the benefits of Open Data”? followed by an immediate riposte from Rafael: “What aren’t the benefits of Open Data?”. Rafael explained that open data led to transparency in research, re-usability of data, benchmarking, integration, new discoveries and, most importantly, sharing data kept it alive. If data was not shared and instead simply kept on the computer’s hard drive, no one would remember it months after the initial publication. Sharing is the only way in which data can be used, cited, and built upon years after the publication. Frances added that research data originating from publicly funded research was funded by tax payers. Therefore, the value of research data should be maximised. Data sharing is important for research integrity and reproducibility and for ensuring better quality of science. Sarah said that the biggest benefit of sharing data was the wealth of re-uses of research data, which often could not be imagined at the time of creation.

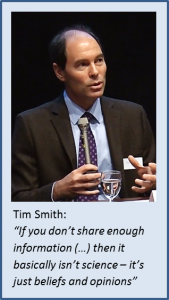

Next came a quick question from Danny: “What are the benefits of Open Data”? followed by an immediate riposte from Rafael: “What aren’t the benefits of Open Data?”. Rafael explained that open data led to transparency in research, re-usability of data, benchmarking, integration, new discoveries and, most importantly, sharing data kept it alive. If data was not shared and instead simply kept on the computer’s hard drive, no one would remember it months after the initial publication. Sharing is the only way in which data can be used, cited, and built upon years after the publication. Frances added that research data originating from publicly funded research was funded by tax payers. Therefore, the value of research data should be maximised. Data sharing is important for research integrity and reproducibility and for ensuring better quality of science. Sarah said that the biggest benefit of sharing data was the wealth of re-uses of research data, which often could not be imagined at the time of creation. Tim also stressed that if open science became institutionalised, and mandated through policies and rules, it would take a very long time before individual researchers would fully embrace it and start sharing their research as the default position.

Tim also stressed that if open science became institutionalised, and mandated through policies and rules, it would take a very long time before individual researchers would fully embrace it and start sharing their research as the default position. Frances mentioned that in the case of personal and sensitive data, sharing was not as simple as in basic sciences disciplines. Especially in medical research, it often required provision of controlled access to data. It was not only important who would get the data, but also what they would do with it. Frances agreed with Tim that perhaps what was needed is a paradigm shift – that questions should be sent to the data, and not the data sent to the questions.

Frances mentioned that in the case of personal and sensitive data, sharing was not as simple as in basic sciences disciplines. Especially in medical research, it often required provision of controlled access to data. It was not only important who would get the data, but also what they would do with it. Frances agreed with Tim that perhaps what was needed is a paradigm shift – that questions should be sent to the data, and not the data sent to the questions. Danny mentioned that the traditional way of rewarding researchers was based on publishing and on journal impact factors. She asked whether publishing data could help to start rewarding the process of generating data and making it available. Sarah suggested that rather than having the formal peer review of data, it would be better to have an evaluation structure based on the re-use of data – for example, valuing data which was downloadable, well-labelled, re-usable.

Danny mentioned that the traditional way of rewarding researchers was based on publishing and on journal impact factors. She asked whether publishing data could help to start rewarding the process of generating data and making it available. Sarah suggested that rather than having the formal peer review of data, it would be better to have an evaluation structure based on the re-use of data – for example, valuing data which was downloadable, well-labelled, re-usable. The final discussion was around incentives for data sharing. Sarah was the first one to suggest that the most persuasive incentive for data sharing is seeing the data being re-used and getting credit for it. She also stated that there was also an important role for funders and institutions to incentivise data sharing. If funders/institutions wished to mandate sharing, they also needed to reward it. Funders could do so when assessing grant proposals; institutions could do it when looking at academic promotions.

The final discussion was around incentives for data sharing. Sarah was the first one to suggest that the most persuasive incentive for data sharing is seeing the data being re-used and getting credit for it. She also stated that there was also an important role for funders and institutions to incentivise data sharing. If funders/institutions wished to mandate sharing, they also needed to reward it. Funders could do so when assessing grant proposals; institutions could do it when looking at academic promotions.