When I was growing up, a diet of B-grade movies gave me the impression that American summer camps were places where teenagers stumble through a series of slapstick events in the wilderness. That may indeed be the case sometimes, but at the University of California San Diego campus recently, a group of decidedly older people bunked in together for a completely different type of summer camp.

The inaugural FORCE11 Scholarly Communications Institute (FSCI) was held in the first week of August, bringing together librarians, researchers and administrators from around the world. The event was planned as a week-long intensive summer school on improving research communication. The activities were spread all over the campus, although not, unfortunately, in the mother of all spaceships of a library.

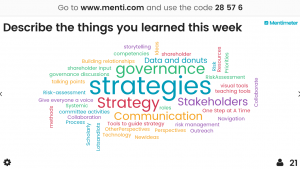

The event hashtag was #FSCI, and the specific hashtag for the course I ran with Sarah Shreeves from the University of Miami, “Building an Open and Information Rich Institution”, was #FSCIAM3. This blog is a brief rundown of what we covered in the course.

Our course

We had a wonderful group of people, primarily from the library sector, and from around the world (although many were working in American universities).

From the delivery perspective this was an intense experience, requiring 14 hours of delivery plus the documentation and follow-up each day. It was further complicated by the fact that Sarah and I met in person for the first time half an hour before delivery on the Monday.

Working within open and FAIR principles, we have made all of our resources and information available, and links to all the Google documents are included in this blog post. The shared Google Drive has links to everything. These presentations will be uploaded to the FSCI Zenodo site when it is available. In addition, the group created a Zotero page which collects relevant links and resources as they arose in discussion.

Monday – Problem definition

Using an established process the group worked together to define the problems we were looking to address in scholarly communication:

- OA takes time and money – and the tools are annoying.

- We need to reduce complexity – make it easy administratively

- It is important to recognise difference – one size does not fit all, there are cultural and country norms in publishing and prestige

- Motivation – what are the incentives? How can we demonstrate benefit?

- There is a need for advocacy and training of various stakeholders, including within the library

- We can demonstrate the repository as a free way of publishing with impact tracking – for both the author and the institution.

- Whose responsibility is this?

The slides from the first day (including the workings of the group) are available.

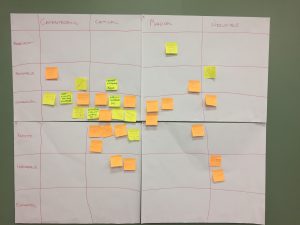

Tuesday – Stakeholder mapping

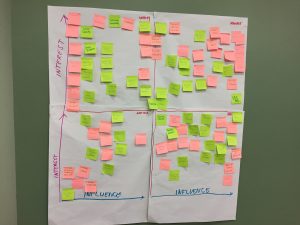

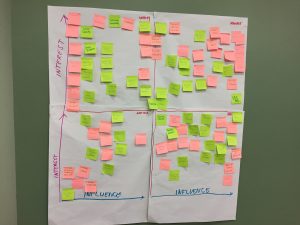

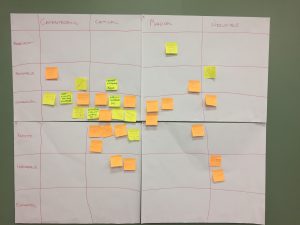

On the second day we discussed the different stakeholders in this space, both within institutions and external to them. Each table created a pile of Post-it notes, which were then classified on a large grid on the wall against ‘interest’ versus ‘influence’. We then discussed which stakeholders we needed to work with, and whether it is possible to move a stakeholder from one quadrant into another. We also discussed the value in using some stakeholders to reach others.

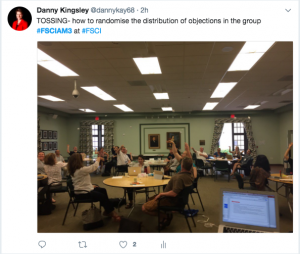

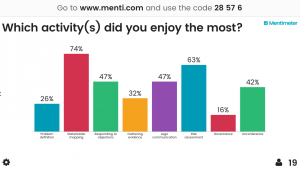

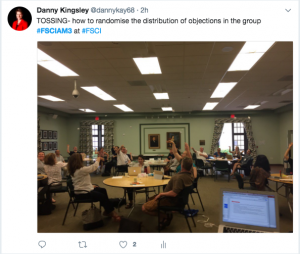

A second exercise we ran was ‘responding to objections’ – where we gave the group a few minutes to create objections that different stakeholders may have to aspects of scholarly communication. These were then randomised and the group had only a couple of minutes to develop an ‘elevator pitch’ to respond to that objection. The slides from day 2 incorporate the comments, objections and counter arguments.

Wednesday – Communication

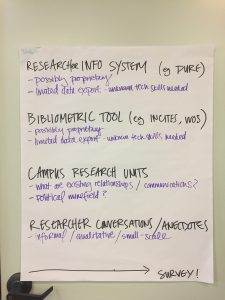

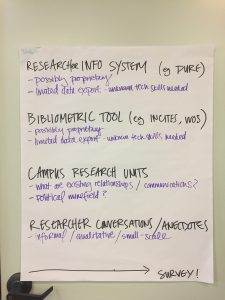

We started the day with a ‘gathering evidence’ exercise. Each table was allocated a series of questions to discuss, with a view to identifying the kind of information held in an institution that might help answer them. Examples of the questions we asked the group to consider are: How do we better understand and communicate with the range of disciplines on campus? (with a goal of creating advocacy materials that support the range of disciplinary needs of the institution) or Who is doing collaborative research with others on campus and with others outside of the university? Is there interdisciplinary research? (with a goal of creating a map of collaborations on campus).

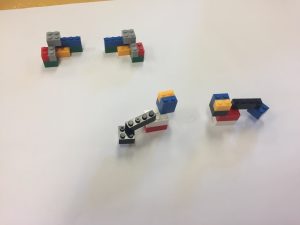

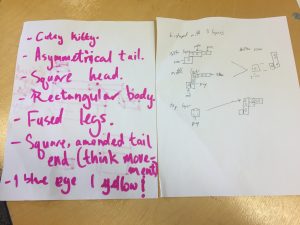

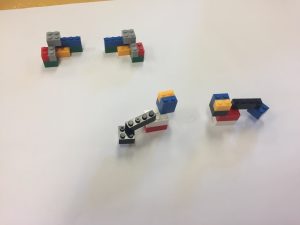

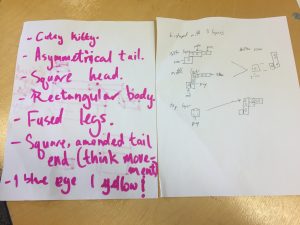

We moved to an exercise to demonstrate the need for clear communication. People worked in pairs and were given a pile of building bricks from which to build a shape. They then had five minutes to describe their shape in a set of instructions. After this the instructions were swapped and the opposite pair tried to reproduce the shape from the instructions.

The results were surprising – with fewer than 50% of shapes reproduced. However, looking at some of the instructions, things became clearer. Note the description ‘cute kitty’ in these instructions.

The final session on day three was a risk assessment exercise where we put up the proposal ‘that we will make all digitised older theses open access without obtaining permission from the authors’. The tables were asked to come up with potential risks that could arise from this proposal, and then asked to map these onto a grid that considered the likelihood and severity of each risk.

The group then discussed what could be done to mitigate the risks they had identified, and determined whether each risk could then be moved within the grid. Again, all discussions are captured in the slides.

Thursday – Governance

On the Thursday we considered matters of governance. Dominic Tate from Edinburgh talked the group through the management structure at his institution, and how they have managed to create strong decision-making governance.

Using a system of mapping organisational structure to the decision structure, the participants identified a goal they would like to achieve at their workplace and then considered the aspects that are Strategic, Tactical and Operational. They then identified the person, people or group that would need to agree at each of these stages to achieve the end goal, and whether this was something that could be managed within the immediate organisation or would involve the wider institution. We also discussed whether policies would need to be changed or created, and the level of consultation needed. The slides describe the process.

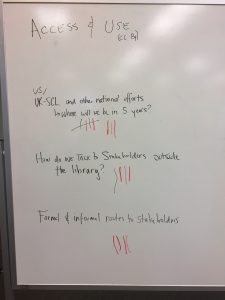

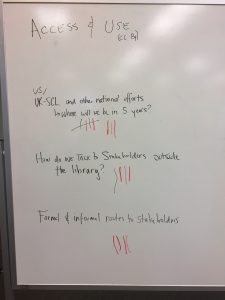

At the end of this day we broke into two groups for an unconference. One group discussed the UK Scholarly Communication Licence; the other continued the governance discussion by identifying stakeholders and working out how to approach them.

Friday – The future

On the last day we discussed the best way to share stories with the relevant stakeholders – what is the best way to present the information? How do you get it to the person?

We then looked to the future, first by considering the big disruptor technologies of the last 20 years. We asked people to share their work experiences from before these technologies existed, to give us an idea of how much things could change in the future with the next big disruptors. We then asked individuals to identify future issues that they will need to address at their institution, which they sorted at the table level before we did a group consolidation to identify what the issues will be.

Each group chose one of these issues/futures and, in a mini overview of the work we had done throughout the week, undertook a stakeholder assessment – who would they have to engage to make this happen? They also identified the governance structures in place, and the type of information they would want to have in order to make decisions about moving in this area. Some of the discussions are captured in the slides.

Assessment of the course

When developing the course we articulated what we hoped the participants would get out of the week. These included the ability to:

- Think strategically and comprehensively about openness and their institution

- Articulate the ‘why’ of openness for a variety of stakeholders within an institution

- Articulate how information related to research and outputs flows through an institution and understand challenges to this flow of information

- Understand the practicalities of delivering open access to research outputs and research data management within an institution

- Consider the technology, expertise, and resources required to support open research

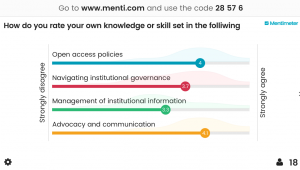

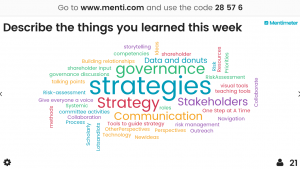

So how did we do? Well, according to the feedback at the beginning and end of the week, we certainly hit all the targets the participants identified.

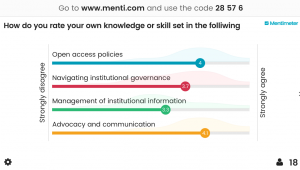

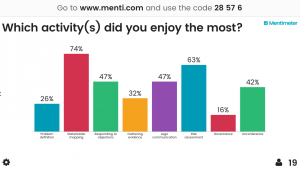

The responses at the beginning of the week were:

And the feedback at the end of the week was:

Interestingly, the Governance session was the least popular session we ran, but it rated extremely highly in the areas the participants self-identified as learning about.

Several people went out of their way to tell Sarah and me that ‘this was the best training/workshop I have ever done’, which is very high praise.

On the Friday afternoon all of the participants for FSCI got back together to provide feedback about what happened in their courses. These ranged from an explanation of what people did, to participants describing what they knew, to poems. There was no expressionist dance unfortunately (perhaps next year). Sarah and I chose to describe our week in pictures.

Wrap of the week

While it was slightly disorienting spending a week in student accommodation, overall this was a valuable and rewarding experience – if extremely intense. Our group of just over 120 people was only one of several ‘camps’ happening at the same time, including electronic music and programming groups. We all converged on the dining hall at each meal, a big hodgepodge of people.

The largest and most intrusive group was the teenagers at the San Diego District Police camp, which we discovered is a paramilitary organisation. This went some way towards explaining the line-ups at 6am and again at 9pm, the groups shouting their responses in unison, and the instructors wandering around with guns on their hips.

On a much more peaceful note, San Diego is where Dr Seuss lived, and looking at the vegetation and landscape it is easy to see where his inspiration originated.

Published 22 August 2017

Written by Dr Danny Kingsley